Docker is a platform for containerization. In simple terms, it lets us package an application and all of its dependencies into a single, portable unit called a container.

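A Docker container can run on any machine where Docker is installed, whether the host is Linux, Windows, or macOS, so the packaged application is largely platform independent.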

We can develop the entire application within a Docker container, and that container can then run anywhere Docker is available.

Example: developer 1 builds his entire software in a Docker container with PHP (backend), MySQL, and Java for both the backend and the front end. Developer 2 can get that container from developer 1 and run it without installing any dependencies and without spending time setting up the environment.
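As a rough illustration of this example, developer 1 might describe the PHP part of the environment in a Dockerfile (a build recipe covered under Key Concepts further down). This is only a sketch under assumptions that are not part of the scenario itself: the `php:8.3-apache` base image tag, the `src/` directory, and the `DB_HOST` variable are hypothetical choices made for illustration.

```dockerfile
# Assumed base image: the official PHP image with Apache (tag chosen for illustration)
FROM php:8.3-apache

# Install the PDO MySQL extension so the PHP code can talk to a MySQL server
RUN docker-php-ext-install pdo_mysql

# Hypothetical setting: hostname of the MySQL server the application connects to
ENV DB_HOST=db

# Copy the application source (assumed to live in ./src) into Apache's document root
COPY src/ /var/www/html/
```

With a file like this, developer 2 would only need Docker itself to rebuild the same environment, for example with `docker build -t my-app .`, instead of installing PHP and its extensions by hand.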

Advantages of Docker:

- Portability:

- Docker containers ensure that applications run consistently across different environments, from development to production. This eliminates the "it works on my machine" problem.

- Resource Efficiency:

- Docker containers are lightweight compared to traditional virtual machines because they share the host OS kernel instead of running a full guest operating system, which allows for better resource utilization.

- Isolation:

- Containers provide isolation, preventing applications from interfering with each other and enhancing security.

- Scalability:

- Docker simplifies scaling applications by allowing you to easily create and manage multiple container instances.

- Faster Deployment:

- Docker streamlines the deployment process, enabling faster and more consistent deployments.

- Consistency:

- Docker allows for the creation of consistent environments throughout the software development life cycle.

Disadvantages of Docker:

- Security Concerns:

- Docker containers share the host OS kernel, which can create security vulnerabilities if not properly managed.

- Vulnerabilities within Docker images themselves can also pose a threat.

- Complexity:

- Managing complex Docker environments, especially at scale, can be challenging.

- Orchestrating containers with tools like Kubernetes adds another layer of complexity.

- Performance Overhead:

- While lightweight, Docker containers do introduce some performance overhead compared to running applications directly on the host OS.

- Persistent Data Storage:

- Managing persistent data in Docker containers requires careful planning and configuration.

- Networking Complexity:

- Setting up and managing complex network configurations between multiple containers can be difficult.
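Some of the concerns above can be partly addressed in the image definition itself. The Dockerfile below is a minimal sketch, not a complete hardening guide. The user name `app`, the `/data` directory, and port `8080` are hypothetical, and the pinned `alpine:3.20` tag is just one example of avoiding a floating `latest` base image.

```dockerfile
# Pin a specific base image version instead of "latest" so rebuilds are predictable
FROM alpine:3.20

# Create an unprivileged group and user (hypothetical name "app")
RUN addgroup -S app && adduser -S app -G app

# Create a data directory owned by that user (hypothetical path)
RUN mkdir -p /data && chown app:app /data

# Mark /data as a volume so its contents live outside the container's writable layer
VOLUME /data

# Document the port the application is expected to listen on (this does not publish it)
EXPOSE 8080

# Run the container's processes as the unprivileged user rather than root
USER app

# Placeholder command that prints which user the container runs as
CMD ["sh", "-c", "echo running as $(id -un)"]
```

Running as a non-root user limits what a compromised process can do given the shared kernel, while `VOLUME` and `EXPOSE` make the storage and networking expectations of the image explicit for whoever runs it.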

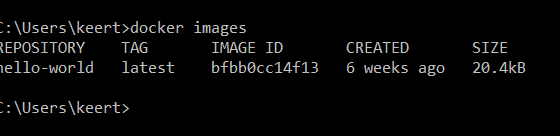

This is what you see after installing Docker and opening it.

To understand Docker:

Key Concepts:

- Dockerfile:

- A Dockerfile is a text file containing instructions for building a Docker image. It defines the base image, adds application code, sets environment variables, and specifies other configurations.

- Docker Registry:

- A Docker registry is a storage and distribution system for Docker images. Docker Hub is the most popular public registry, with a vast collection of pre-built images. You can also create private registries.

- Docker Hub:

- This is the default public registry. It hosts a very large number of pre-built Docker images.

A Docker image is an immutable template that contains everything needed to run the software. Docker images are built in layers, and each layer represents a set of changes. This layered architecture optimizes storage and makes image distribution efficient, because layers shared between images only need to be stored and transferred once.

```dockerfile
# Alpine is a small Linux image available on Docker Hub; FROM pulls it in as the base image
FROM alpine:latest

# CMD sets the default command; echo prints the strings passed to it as arguments
CMD ["echo", "Hello", "Docker!"]
```
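To make the layering idea more concrete, here is a slightly larger sketch. The file names `requirements.txt` and `app.py`, the `APP_ENV` variable, and the `python:3.12-slim` base image are assumptions for illustration and are not part of the example above. Instructions that change the filesystem, such as `RUN` and `COPY`, each add a layer on top of the previous ones, so placing rarely changing steps first lets Docker reuse cached layers when only the application code changes.

```dockerfile
# Base image layers (assumed tag, chosen for illustration)
FROM python:3.12-slim

# Sets (and creates, if needed) the working directory for the following instructions
WORKDIR /app

# Layer: the dependency list only, which changes less often than the source code
COPY requirements.txt .

# Layer: installed dependencies; reused from cache while requirements.txt is unchanged
RUN pip install --no-cache-dir -r requirements.txt

# Layer: application source; editing app.py only invalidates the cache from here on
COPY app.py .

# Environment variable available to the running container (hypothetical name and value)
ENV APP_ENV=production

# Default command when a container starts from this image
CMD ["python", "app.py"]
```

The ordering is the design choice worth noticing: if the source code were copied before the dependencies were installed, every code edit would force the install step to run again.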

The `docker build` command has the general form `docker build [OPTIONS] PATH | URL | -`:

1. docker build:

- This is the core command that initiates the Docker image build process.

2. [OPTIONS]:

- These are optional flags that modify the build process. Some of the most frequently used options are:

- `-t, --tag name[:tag]`:

- Assigns a name and optional tag to the image.

- Example: `docker build -t my-app:latest .` (names the image "my-app" and tags it "latest").

- `-f, --file PATH/Dockerfile`:

- Specifies the path to the `Dockerfile`. If not provided, Docker assumes the `Dockerfile` is in the build context directory.

- Example: `docker build -t my-app:v1 -f ./my-dockerfiles/Dockerfile .`

- `--no-cache`:

- Disables the use of cached intermediate layers during the build. This is useful for ensuring a fresh build.

- Example: `docker build --no-cache -t my-app:latest .`

- `--build-arg <varname>=<value>`:

- Sets build-time variables.

- Example: `docker build --build-arg VERSION=1.2.3 -t my-app .`
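A `--build-arg` value generally only has an effect if the Dockerfile declares a matching `ARG`. The sketch below assumes the variable is named `VERSION`, as in the example command above. The `1.0.0` default, the `APP_VERSION` variable, and the label key are arbitrary choices for illustration.

```dockerfile
FROM alpine:latest

# Declare the build-time variable; the default is used when --build-arg is not passed
ARG VERSION=1.0.0

# ARG values exist only during the build, so copy the value into an ENV
# if the running container needs to see it
ENV APP_VERSION=${VERSION}

# Record the version as image metadata
LABEL version=${VERSION}

# sh expands $APP_VERSION when the container starts
CMD ["sh", "-c", "echo my-app version $APP_VERSION"]
```

Building this file with `docker build --build-arg VERSION=1.2.3 -t my-app .` would bake `1.2.3` into `APP_VERSION` instead of the default.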

3. PATH | URL | -:

- This specifies the build context:

- `PATH`: A local path to a directory containing the `Dockerfile` and any files the `Dockerfile` needs. The `.` (dot) represents the current directory.

- `URL`: A Git repository URL.

- `-`: Reads the `Dockerfile` from standard input.
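The build context matters because instructions like `COPY` can only read files that are inside it. In the sketch below, the `config/app.conf` path and the destination directory are hypothetical. The point is that the source path is resolved relative to the context directory passed to `docker build`, not relative to the location of the Dockerfile.

```dockerfile
FROM alpine:latest

# The source path is relative to the build context root, e.g. ./config/app.conf
# when the image is built with "docker build -t my-app ."
COPY config/app.conf /etc/my-app/app.conf

# Files outside the context, such as one in the parent directory,
# cannot be reached by COPY

# Print the copied file when the container starts
CMD ["cat", "/etc/my-app/app.conf"]
```

This is also why a large context directory slows builds down: its contents are what the build has access to, so keeping the context small keeps builds faster.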
